Microsoft Sentinel, formerly known as Azure Sentinel, is a technology I came into contact with while working on a security project for a client with a predominantly Azure-based cloud environment. My remit was to review Microsoft Sentinel to see whether it was fit for purpose and could be used by my client.

So, what is Microsoft Sentinel? Microsoft Sentinel is a Security Information and Event Management (SIEM) service as well as a Security Orchestration, Automation and Response (SOAR) service. It was developed by Microsoft, originally for its Azure cloud offering, but can now be used for other cloud environments as well as on-premises environments such as company-managed data centers.

Microsoft Sentinel has matured as a SIEM and SOAR product, leading to far greater adoption. It can collect logs, detect security anomalies within those logs, investigate the anomalies for potential security threats, and respond by determining the severity of the threats and raising alerts, which Security Operations Center (SOC) analysts can use to create and manage the associated incidents.

Microsoft Sentinel uses log data, consisting of security logs and events, which it ingests from the Log Analytics Workspaces associated with the Microsoft Sentinel instance. Microsoft Sentinel can then analyze this log data for security anomalies and, from those, potential threats.

The data comes from assets ranging from servers and network devices to virtual resources within Azure and other cloud providers, such as Amazon Web Services (AWS) and Oracle Cloud Infrastructure (OCI).

Log Analytics Workspaces

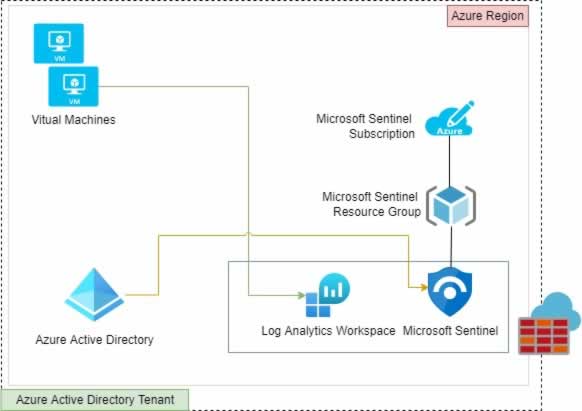

Microsoft Sentinel needs to ingest data to be able to analyze it, and maintaining direct connectivity to many separate data sources is not an efficient way for it to work. Microsoft Sentinel therefore relies on a Log Analytics Workspace as its logical storage. Systems and services send the collected data to this workspace, not only from within the Azure region but also from other sources (data centers, other cloud providers, SaaS).

The Log Analytics Workspace places few practical limits on the amount of data stored or the number of services and data sources connecting to it. However, there can be charges for collecting or transferring data to the workspace, and a second set of charges applies once a Log Analytics Workspace is added to Microsoft Sentinel.

All data collected in that workspace is then subject to two sets of charges: the Microsoft Sentinel charges and the Log Analytics Workspace charges. It therefore makes sense to ensure the data ingested by the Log Analytics Workspace and Microsoft Sentinel is the minimum required for effective analysis, as irrelevant data won’t improve the ability to scrutinize the data for threats and will only increase ingestion costs.

I tend to advise my clients to separate security and operational data into different workspaces, as operational data doesn’t really add value to detecting threats. This type of data, especially performance data, is still relevant for other non-security purposes and does still need to be collected. By adopting this approach, I can help my clients reduce their Microsoft Sentinel ingestion charges by keeping only the required security data in scope.
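The effect of splitting workspaces on the bill can be sketched with some simple arithmetic. The per-GB prices and daily volumes below are placeholders chosen to keep the sums easy to follow, not real Azure prices, and `IngestionRates` and `monthly_ingestion_cost` are hypothetical names used only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngestionRates:
    """Hypothetical per-GB prices; real prices vary by region and pricing tier."""
    log_analytics_per_gb: float
    sentinel_per_gb: float


def monthly_ingestion_cost(gb_per_day: float, rates: IngestionRates,
                           sentinel_enabled: bool, days: int = 30) -> float:
    """Estimate one month of ingestion charges for a single workspace."""
    per_gb = rates.log_analytics_per_gb
    if sentinel_enabled:
        # A Sentinel-enabled workspace pays both sets of charges on every GB.
        per_gb += rates.sentinel_per_gb
    return round(gb_per_day * days * per_gb, 2)


# Placeholder prices and volumes (assumptions, not published figures).
rates = IngestionRates(log_analytics_per_gb=2.0, sentinel_per_gb=2.0)

# Option 1: everything (100 GB/day) lands in one Sentinel-enabled workspace.
all_in_one = monthly_ingestion_cost(100, rates, sentinel_enabled=True)

# Option 2: 40 GB/day of security data goes to the Sentinel workspace and
# 60 GB/day of operational data goes to a plain Log Analytics Workspace.
security_only = monthly_ingestion_cost(40, rates, sentinel_enabled=True)
operational = monthly_ingestion_cost(60, rates, sentinel_enabled=False)
split_total = security_only + operational
```

With these made-up numbers the single workspace costs 12000.0 per month and the split costs 8400.0, because the operational data no longer attracts the Microsoft Sentinel charge.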

Microsoft Sentinel can work with multiple Log Analytics Workspaces, as long as the workspaces are part of the same Azure Active Directory tenant. Otherwise, Azure Lighthouse is required to use data from Log Analytics Workspaces in a different Azure Active Directory tenant.

Just for information, an Azure Active Directory tenant is an identity and access management (IAM) boundary for applications and resources used within an organisation. An identity in this context is an object in Azure Active Directory (AAD) that can be authenticated and then authorized to access resources within the tenant. Identities can be human, like employees, or machine-based, like computers, applications and services.

Analytics logs vs basic logs vs archive logs

There are two types of logs that can be ingested into a Log Analytics Workspace:

- Basic logs

- Analytics logs

Analytics logs contain additional information that is useful for threat detection, whilst basic logs don’t have the level of detail needed to serve as a primary log source for security incidents or alerting.

Basic log data can be used where correlation is required during an investigation into an incident, or during threat hunting exercises, as it can help in drawing conclusions. Unlike basic logs, analytics logs can be interrogated with the full Kusto Query Language (KQL), allowing more detailed and more efficient analysis.

The data retention period, that is, the time the logs are kept before they are automatically deleted, varies between basic logs and analytics logs.

| Feature | Basic logs | Analytics logs | Details |
| --- | --- | --- | --- |
| Default retention period | 8 days | 31 to 90* days | * Depends on the log sources |
| Extend retention period | No | Yes | Basic logs are fixed at 8 days |
| Extended retention period | N/A | Up to 2 years | Depending on the log sources, the retention period for analytics logs can be as long as 2 years |

Logs can be kept longer than the maximum retention period available, but there is a cost, both financial and functional. Analytics logs can be archived, that is, put into a special type of storage, and when they are needed again they can be brought back and used as they were before they were archived.

Archived logs cannot be used fully: operations such as running KQL queries to find threats and patterns are not available against them, which is why they need to be brought back out of their archived state first. While archived, they can be used only in a limited fashion:

- Limited searching of archived logs is possible, but each search can only cover a single one-year period. Older archives require the search to be broken into separate yearly searches, so 7 years of archived data would require 7 separate searches.

- Searches can be slow, in some cases taking up to 24 hours to complete.
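Breaking a long archive search into yearly pieces is easy to get wrong by hand, so here is a minimal sketch of the date arithmetic. It assumes a one-year search window means 365 days, and `yearly_search_windows` is a hypothetical helper, not part of any Microsoft SDK. It only produces the time ranges; submitting each search is left out.

```python
from datetime import datetime, timedelta
from typing import List, Tuple


def yearly_search_windows(start: datetime, end: datetime,
                          max_days: int = 365) -> List[Tuple[datetime, datetime]]:
    """Split the range [start, end) into consecutive windows of at most max_days."""
    if end <= start:
        raise ValueError("end must be after start")
    step = timedelta(days=max_days)
    windows = []
    cursor = start
    while cursor < end:
        # The final window is clipped so it never runs past the requested end.
        window_end = min(cursor + step, end)
        windows.append((cursor, window_end))
        cursor = window_end
    return windows


# Seven years of archived data (7 x 365 days) becomes seven separate searches.
archive_start = datetime(2017, 1, 1)
archive_end = archive_start + timedelta(days=7 * 365)
windows = yearly_search_windows(archive_start, archive_end)
```

Each `(start, end)` pair in `windows` can then be used as the time range of one search, and the results combined afterwards.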

Restoring archived logs creates a new table within the Log Analytics Workspace, with a special restored-table marker appended to its name, and this restored table does not have any default retention period. It’s therefore vitally important to use the restored table only for as long as it’s needed and then delete it, to avoid the additional charges for keeping it available.

There are alternatives to archiving that don’t sacrifice the ability to analyze the analytics logs, including Azure Data Explorer (ADX) and Azure Blob storage. With the analytics logs held in these storage services, they can still be analyzed using KQL, and real-time queries can be performed without waiting long periods for searches to complete.

Data Connectors

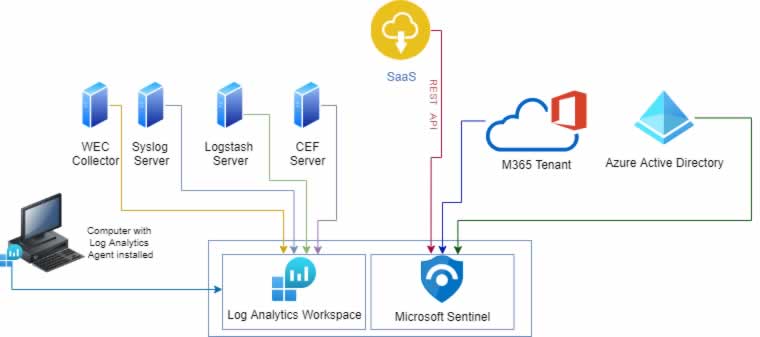

Microsoft Sentinel Data Connectors, including agents, are used to collect security logs and events from systems and services within Azure, other cloud providers, SaaS applications and on-premises data centers.

Microsoft Native Data Connectors

The native log sources from Microsoft services, from Azure services, Dynamics 365 and Office 365 to the Microsoft Defender security services, can be obtained easily by Microsoft Sentinel. These include services such as:

- Azure Active Directory

- Azure Distributed Denial of Service (DDoS)

- Azure Kubernetes Service (AKS)

- Web Application Firewall (WAF)

In most cases, there is no need to pay any ingestion charges for these native services, as the data already resides within the same tenant.

A Microsoft 365 Tenant provides online services like Microsoft Office and Microsoft Teams as well as cloud based services owned by Microsoft such as Exchange Online and OneDrive. Security logs and events from the M365 Tenants can be collected by Microsoft Sentinel using an integration with the Microsoft 365 Management API.

Log Analytics Agent

The Log Analytics Agent can be installed on devices to send logs to an associated Log Analytics Workspace. This method works well when the number of devices is low, as it’s easier to manage. Across larger organizations, however, devices often already run a number of competing agents, from anti-virus and performance monitoring to data loss prevention.

In that case it may make sense to have a centralized server with the Log Analytics Agent installed on it, to which all the logs from the other devices are sent. This way there’s no need to install the Log Analytics Agent on every device, which protects those devices from the additional performance impact of another agent, as multiple agents doing different things on a device can lead to higher than normal processing power usage.

Syslog Forwarder

A Syslog Forwarder can be set up to collect syslog-format logs from servers, such as Linux-based servers, and from networking devices such as firewalls and routers. The syslog forwarder will generally need the Log Analytics Agent installed so that it can send log data to the Log Analytics Workspace for Microsoft Sentinel.

A log parser will generally need to be created within Microsoft Sentinel to process the information in the syslog logs and make sure the relevant information is being ingested.
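To give a feel for what such a parser has to do, here is a rough Python sketch that pulls the main fields out of a classic BSD-style (RFC 3164) syslog line. Parsers inside Microsoft Sentinel are written in KQL rather than Python, so this only illustrates the idea. The sample line, the `parse_syslog` name and the regular expression are assumptions for this example, and real devices often deviate from the format.

```python
import re
from typing import Optional

# <PRI>Mmm dd hh:mm:ss host app[pid]: message   (the [pid] part is optional)
SYSLOG_LINE = re.compile(
    r"^<(?P<pri>\d{1,3})>"
    r"(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2}\s\d{2}:\d{2}:\d{2})\s"
    r"(?P<host>\S+)\s"
    r"(?P<app>[^:\[\s]+)(?:\[(?P<pid>\d+)\])?:\s?"
    r"(?P<message>.*)$"
)


def parse_syslog(line: str) -> Optional[dict]:
    """Return the fields of one syslog line, or None if it does not match."""
    match = SYSLOG_LINE.match(line.strip())
    if match is None:
        return None
    # The priority value encodes facility * 8 + severity.
    facility, severity = divmod(int(match["pri"]), 8)
    return {
        "facility": facility,
        "severity": severity,
        "timestamp": match["timestamp"],
        "host": match["host"],
        "app": match["app"],
        "pid": int(match["pid"]) if match["pid"] else None,
        "message": match["message"],
    }


# Made-up sample line from a hypothetical firewall named fw01.
sample = ("<38>Mar  3 09:12:01 fw01 sshd[2211]: "
          "Failed password for root from 203.0.113.7 port 52144 ssh2")
parsed = parse_syslog(sample)
```

Once the fields are separated like this, it becomes straightforward to keep the relevant ones (host, application, message) and drop lines that carry no security value.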

Common Event Format (CEF) Forwarder

The CEF log forwarder uses a specialized log format, the Common Event Format, that can be easily ingested by Microsoft Sentinel. A number of CEF templates are available that can be customized for use with CEF log forwarders, so that logs are handled efficiently and only the required information is sent to Microsoft Sentinel for ingestion.
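As an illustration of why CEF is easy to ingest, the sketch below splits a CEF record into its seven pipe-separated header fields and its key=value extension, keeping only the extension keys that are asked for. It is a simplified reading of the format (escaped `=` characters in values are not handled), and `parse_cef`, the `keep` parameter and the sample record are assumptions for this example rather than anything Microsoft Sentinel exposes.

```python
import re
from typing import Optional, Set

CEF_HEADER_FIELDS = (
    "version", "device_vendor", "device_product", "device_version",
    "signature_id", "name", "severity",
)


def parse_cef(line: str, keep: Optional[Set[str]] = None) -> dict:
    """Parse one CEF record; keep only the extension keys listed in `keep`."""
    start = line.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF record")
    # Split on pipes that are not escaped with a backslash; everything after
    # the seventh header field is the extension.
    parts = re.split(r"(?<!\\)\|", line[start + 4:], maxsplit=7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")
    record = dict(zip(CEF_HEADER_FIELDS, parts[:7]))
    extension = parts[7] if len(parts) > 7 else ""
    # A value runs until the next " key=" or the end of the line, so values
    # containing spaces stay in one piece.
    for key, value in re.findall(r"(\w+)=(.*?)(?=\s+\w+=|\s*$)", extension):
        if keep is None or key in keep:
            record[key] = value
    return record


# Made-up sample record; only the source and destination addresses are kept.
sample = ("CEF:0|Security|threatmanager|1.0|100|worm successfully stopped|10|"
          "src=10.0.0.1 dst=2.1.2.2 spt=1232")
record = parse_cef(sample, keep={"src", "dst"})
```

Dropping unneeded extension keys before forwarding is one way to keep the ingested volume, and therefore the cost, down.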

Logstash Collector

The Logstash collector is another collector with log optimization capabilities, where only the required fields from the logs are collected. These are then sent to the Microsoft Sentinel Log Analytics Workspace.

Windows Event Collectors (WEC)

Windows Event Forwarding can be set up on Windows devices, such as servers, workstations, desktops and laptops, to send their security events to a central server, the WEC server, using group policy settings on the devices. The security events from the WEC server can then be sent to the Log Analytics Workspace associated with the Microsoft Sentinel instance.

REST API integration

Some systems and services, especially Software as a Service (SaaS) offerings, can have their log data sent directly to the Log Analytics Workspace: an ID and secret for the SaaS service are used to authenticate and pull log data back to the Log Analytics Workspace over a secure (encrypted) connection.
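The general shape of such a pull can be sketched as follows. Every SaaS vendor has its own API, so the endpoint URL, the `/logs` path, the `since` parameter and the use of HTTP Basic authentication are all assumptions made for this example. The code builds the authenticated request but deliberately does not send it.

```python
import base64
import urllib.parse
import urllib.request


def build_log_pull_request(base_url: str, client_id: str,
                           client_secret: str, since: str) -> urllib.request.Request:
    """Build (but do not send) an authenticated request for new log records."""
    if not base_url.startswith("https://"):
        # Refuse plain HTTP so the ID and secret are never sent unencrypted.
        raise ValueError("log endpoint must use HTTPS")
    credentials = f"{client_id}:{client_secret}".encode("utf-8")
    token = base64.b64encode(credentials).decode("ascii")
    query = urllib.parse.urlencode({"since": since})
    return urllib.request.Request(
        f"{base_url}/logs?{query}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


# Hypothetical endpoint and credentials, used only to show the request shape.
request = build_log_pull_request(
    "https://saas.example.com/api", "my-client-id", "my-client-secret",
    "2024-01-01T00:00:00Z",
)
```

In practice the secret would come from a secure store such as a key vault rather than being written into code, and many services use a token exchange instead of Basic authentication.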

SOAR

Microsoft Sentinel’s SOAR capability can be used to provide an automated response. For example, a compatible firewall that is being attacked on its Secure Shell (SSH) port can have that port closed automatically by the SOAR automation.

Microsoft Sentinel can use playbooks to provide an automated response to a threat. The playbook itself is a workflow, that is, a set of tasks to go through to deal with the threat. Different playbooks are available for different scenarios and even different systems, along with templates for creating your own playbooks.

A playbook uses Azure Logic Apps managed connectors and/or Azure Logic Apps custom connectors to connect to systems and services, be they Microsoft-based or not. These connectors give the playbook the ability to run its component tasks on the system or service.
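Real playbooks are built as Azure Logic Apps workflows rather than written in Python, but the idea of a workflow made of ordered tasks can be sketched in a few lines. Everything here is hypothetical: the `Incident` fields, the task functions and the firewall name are invented for illustration, and the tasks only record what a connector would do instead of calling a real system.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Incident:
    title: str
    device: str
    port: int
    severity: str
    actions_taken: List[str] = field(default_factory=list)


# A task is any function that acts on an incident.
Task = Callable[[Incident], None]


def close_port(incident: Incident) -> None:
    # Stand-in for a connector call that would reconfigure the firewall.
    incident.actions_taken.append(
        f"closed port {incident.port} on {incident.device}")


def notify_soc(incident: Incident) -> None:
    # Stand-in for a connector call that would message the SOC team.
    incident.actions_taken.append(
        f"notified SOC about '{incident.title}' ({incident.severity})")


def run_playbook(incident: Incident, tasks: List[Task]) -> List[str]:
    """Run each task in order and return the log of actions taken."""
    for task in tasks:
        task(incident)
    return incident.actions_taken


incident = Incident("SSH brute force", device="fw01", port=22, severity="High")
actions = run_playbook(incident, [close_port, notify_soc])
```

Keeping each task small and independent mirrors how playbook steps are chained, and makes it easy to reuse the same task in playbooks for different scenarios.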

Threat Intelligence

Threat Intelligence is a key component of discovering potential threats including indicators of compromise (IoC). Microsoft Sentinel can use external intelligence sources from third-party threat intelligence organizations as well as from STIX/TAXII feeds (open source and premium).

For example, intelligence about known malicious Internet Protocol (IP) addresses or Domain Name System (DNS) names quickly allows Microsoft Sentinel to be wary of connections made from those addresses and names. To use STIX/TAXII threat intelligence feeds, an appropriate threat intelligence data connector is required for Microsoft Sentinel, as TAXII is the protocol over which the STIX-formatted intelligence is ingested.
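The matching idea can be sketched in Python: take the IPv4 values out of simple STIX indicator patterns and flag any connection whose source address matches. This is only an illustration of the concept, not how Microsoft Sentinel implements it. The indicator objects are trimmed to a few fields, the connection records and function names are made up, and only the basic `ipv4-addr:value = '...'` pattern form is handled.

```python
import ipaddress
import re

# Matches the simple STIX pattern form: [ipv4-addr:value = '203.0.113.7']
IPV4_PATTERN = re.compile(r"ipv4-addr:value\s*=\s*'([^']+)'")


def indicator_networks(indicators):
    """Collect the IPv4 addresses or CIDR ranges named in STIX indicators."""
    networks = []
    for indicator in indicators:
        for value in IPV4_PATTERN.findall(indicator.get("pattern", "")):
            # A single address becomes a /32 network, so both forms match alike.
            networks.append(ipaddress.ip_network(value, strict=False))
    return networks


def flag_connections(connections, networks):
    """Return the connections whose source IP falls inside any indicator."""
    flagged = []
    for connection in connections:
        source = ipaddress.ip_address(connection["src_ip"])
        if any(source in network for network in networks):
            flagged.append(connection)
    return flagged


# Made-up indicators and connection records using documentation IP ranges.
indicators = [
    {"type": "indicator", "pattern_type": "stix",
     "pattern": "[ipv4-addr:value = '203.0.113.7']"},
    {"type": "indicator", "pattern_type": "stix",
     "pattern": "[ipv4-addr:value = '198.51.100.0/24']"},
]
connections = [
    {"src_ip": "203.0.113.7", "dst_port": 22},
    {"src_ip": "192.0.2.10", "dst_port": 443},
    {"src_ip": "198.51.100.25", "dst_port": 22},
]
flagged = flag_connections(connections, indicator_networks(indicators))
```

Here the first and third connections are flagged, one by an exact address and one by falling inside a listed range.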

Conclusion

Microsoft Sentinel is a Security Information and Event Management (SIEM) service with Security Orchestration, Automation and Response (SOAR) capability. It can analyze log data for potential threats and respond to them using automated workflows known as playbooks.

A Log Analytics Workspace is required to store the log data collected from different systems and services. This data is ingested by Microsoft Sentinel and analyzed for threats using artificial intelligence to spot patterns, together with threat intelligence feeds, which contain information about threats in the field as well as historical threats.